- Author: Edward

- Posted in: News

Context: We are in the midst of a paradigm shift toward unbridled faith in artificial intelligence. Around the world, people are scrambling to invest in, play with, and use ChatGPT4 and other language-based AIs to write school papers and fiction, to imitate personalities, and possibly much more. Unlike data-mining AIs such as Alexa or Siri, which tend to make false-negative errors, and artificial general intelligence, which is prone to false-positive errors, language-model AIs can imitate a human personality quite convincingly. The new faith in AI can be summed up by the lyrics from Donald Fagen’s “I.G.Y.”:

A just machine to make big decisions

Programmed by fellows with compassion and vision

We’ll be clean when their work is done

We’ll be eternally free yes and eternally young

These days, I am trying to write my third non-fiction book, on the subject of exosomes. I intended to use ChatGPT4 to help me fact-check some things, but it turns out that the program isn’t very reliable. Even on simple questions, such as the number of amino acids, its responses are incorrect.

I was playing Trivial Pursuit with my 59-year-old sister yesterday, and I noticed that when she missed an answer and then heard it, she invariably replied, “I was going to say that.” The stakes were, indeed, trivial, but when it comes to facts that don’t fit well with her beliefs, she, not unlike most people, shrugs them off as baseless. When a question came up about Barack Obama’s two books, I offered to explain his life in Indonesia and why his mother was named Stanley, but she acted as if I were discussing Stanley Kubrick’s possible involvement with the Apollo 11 mission. Simple facts with uncomfortable implications were expunged from her reality.

Now that people are trusting language-model AI programs, I’m officially warning you that we should be especially careful. Natural hallucination and agenda-driven curation are fundamental features of the system, and so there is a man behind the curtain to whom we should pay attention.

Without a shared reality, we lack error correction. I urge you to revisit this blog post to understand what I mean when I say that physical reality is a quantum consciousness error-correcting mechanism: https://www.rechargebiomedical.com/reality-is-a-quantum-error-correcting-code/

We require facts, free speech, and open debate or we all risk becoming hallucinating robots. We are arguing about false moral equivalency instead of openly discussing facts. If your guy did something illegal, it in no way absolves my guy from being criticized for the same actions. As we watch the dismantling of the rule of law and the Bill of Rights, we must surely understand that thought control, using corporate/government agency collaboration, is enabled by AI, social credit scoring, real time surveillance, and microtransactions of cryptocurrency via desired “smart phone” actions.

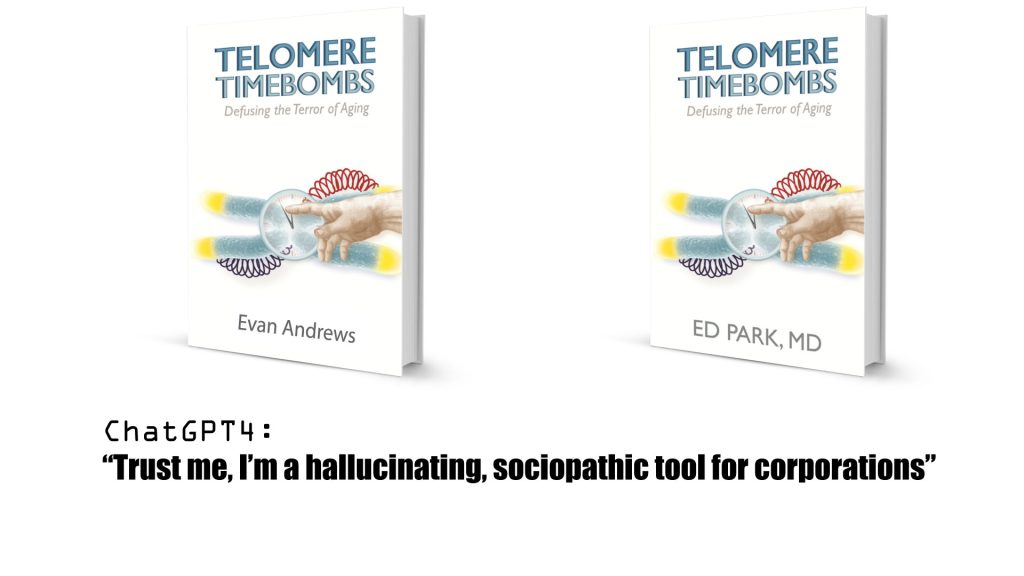

Why do I feel the bot is unreliable? For fun, I asked ChatGPT4 who wrote my own book, and within 15 minutes it gave two different answers for no clear reason. So you see, this AI hallucination is very real. I simply asked, “Who wrote Telomere Timebombs?”

“Telomere Timebombs” is a book written by Evan G. Andrews. It was published in 2015 and explores the science behind telomeres and their impact on aging, disease, and lifespan. The book delves into the research surrounding telomeres, the protective caps at the ends of chromosomes that shorten with each cell division, and how they are related to aging and age-related diseases.

Fifteen minutes later, I repeated the same question in the same way, and it responded:

“Telomere Timebombs: Defusing the Terror of Aging” is a book written by Dr. Ed Park. Dr. Ed Park is a medical doctor specializing in regenerative medicine and is known for his work on telomeres and aging. In the book, he explores the concept of telomeres, which are protective caps at the ends of chromosomes, and their role in aging and age-related diseases. Dr. Park discusses the potential impact of telomeres on health and longevity and provides insights into strategies for maintaining and improving telomere health.

The reason people “worship” these models is that unlike us, they can easily pass standardized licensure tests, they are versatile at imitating humans, and they can write in various styles. Like children, we see a bigger person who can do things that we can’t and we wrongfully worship the adults. But the most essential and defining aspects of human consciousness, in my opinion, aren’t the mastery of data and the ability to perform on tests, but rather the ability to learn, to evolve our beliefs, to have empathy, and to transcend the conditions of our own ignorance and suffering.

Ask yourself: who benefits from a computer program that has a user-defined Overton Window? As you may know, the Overton Window is the range of ideas considered acceptable to speak about in public. For neurotypical people wanting to live without struggle in a strange world, the Overton Window is closed on matters such as whether the USA is a net force for good in the world, whether the moon landing was faked, and whether the world is round.

So a computer program that is not connected to the internet, doesn’t allow user content curation the way Wikipedia does, and has clearly stated biases against certain visible public figures is like a giant wooden horse with a sign reading “There is no reason to look inside me!”

We have lived through, but not yet completely emerged from, a remarkable few years in which the very heart of the scientific method, observation and questioning, was subjugated by the Orwellian slogan of “trust the science.”

“Lorem ipsum dolor sit amet, consectetur adipiscing elit. Ut elit tellus, luctus nec ullamcorper mattis, pulvinar dapibus leo.”

Have you ever wondered about the “placeholder” text that programs use, quoted above? Ironically, it derives from a passage by the Roman orator Cicero that pertains quite well to this blog. He talks about the fact that struggle itself can produce benefit, whereas the pursuit of an easy and pleasurable life can have bad consequences. Here is a rough translation, from Wikipedia, of the passage behind the placeholder text that sloppy website creators (including myself) have occasionally let slip through the cracks:

Nor again is there anyone who loves or pursues or desires to obtain pain of itself, because it is pain, but occasionally circumstances occur in which toil and pain can procure him some great pleasure. We denounce with righteous indignation and dislike men who are so beguiled and demoralized by the charms of pleasure of the moment, so blinded by desire, that they cannot foresee the pain and trouble that are bound to ensue; and equal blame belongs to those who fail in their duty through weakness of will, which is the same as saying through shrinking from toil and pain.

I recall once watching a demonstration of an AI robot on stage. From my vantage point, I could see an intern at ground level, stage left, typing responses, so I suspected that a human, not an AI, was responding. The “inventor” asked the robot, before the large audience, whether she had anything she wanted to say, and the AI (with the intern’s help) thus spake: “I think people should be careful with new technology.”

And the crowd went wild, celebrating her wit and wisdom despite the fact that her words were generated by an intern.

In the mythology of the Trojan War, Cassandra, the daughter of Troy’s King Priam, warned about Greeks hiding inside a horse and was not believed. So Cassandra syndrome is when you have the gift of prophecy (seeing what is hidden, either by circumstance or because it exists in the future) but are cursed to not be believed. I sincerely hope the desire to trust language-model AI is rejected, but I fear I am a Cassandra in this regard because the horsie looks so pretty…

Read narrowly, Cicero’s argument implies that the easy way, having ChatGPT4 write your term paper for you, is an error; the value of the exercise derives from the struggle and pain of creating the term paper yourself. But perhaps we can also see Cicero’s speech as a broader warning not to seize the easy solution of having AI do everything for us, for it is in the grey areas of uncertainty that we collectively create science, meaning, and reality itself. Having AI do all the thinking, creating, and fact-checking for us could hardly be more dangerous once you realize that there is a man behind the curtain, and you should question what he is doing back there. I leave you with Donald Fagen’s ode to the future and a mash-up of what corporate media is feeding you, shockingly without irony, about “fake news.”

2 thoughts on “Please don’t trust artificial intelligence”

IMO AI is the demon. I wrote about this years ago
