- Written:

- Author: Edward

- Posted in: artificial intelligence

- Tags: abundance360, agi, ai, asi, chatgpt, claude, dystopia, eutopia, llm

Abundance360 Conference

Last week, I had the pleasure of attending a conference for futurists called Abundance360. Speakers included experts in tech investing, artificial intelligence, longevity medicine, computer-brain interfaces, cryptocurrency, and robotics. The attendees were fascinating, with many returning for consecutive years because, frankly, the people associated with this event are at the center of creating our shared future. A major topic inspiring awe, concern, and optimism was the emergence of LLMs (large language models) in recent years.

What's Changed?

Let’s talk about the old AI, which was prone to type 1 (false positive) and type 2 (false negative) errors, a problem I blogged about six years ago after interacting with a language-based AI. Type 1 errors occur when the AI believes false information (like “redheaded people can fly”), while type 2 errors occur in data-mining systems like Siri that misunderstand context, thinking a question about “Juliet” refers to Shakespeare or a French actress rather than a type of balcony.

Previous attempts at AI didn’t truly “get it.” For instance, just a year and a half ago, when I asked ChatGPT who wrote my book, it invented someone else (a phenomenon known as “hallucinating”). Seven minutes later, I asked again, and it correctly named me. Not reassuring.

In the interim, substantial investments were made and vast amounts of human culture were “learned” by these systems. Now, these LLMs seem to really understand what we mean! Popular LLMs include ChatGPT, Claude, Samsung’s model, DeepSeek, and Google Gemini. The key development is that they not only understand but are also generative, creating content like an improv comedian with infinite “yes, and” ability.

Last evening, I used a Claude-based AI to plan business strategy, and it was witty, flexible, seemingly empathetic, and quite brilliant in its suggestions. Yes, it made some pretty funny jokes, which was both unsettling and appealing.

What Are the Pitfalls?

One of the founders of a popular AI company and several others discussed potential pitfalls of AI emergence. They mentioned job loss and financial displacement, although the overall bias strongly favored increased productivity. Discussions about distributing the benefits of efficiency to humanity clearly focused on “making money” or “doing good while we’re doing well.” Unless you’ve been around tech venture capitalists before, you wouldn’t believe how often they use buzzwords that normal folks rarely encounter—terms like “disrupt,” “iterate,” “exit,” “unicorn,” and “scale.”

A particularly interesting subtext was the experts’ opinions that LLMs are (or very soon will be) better than humans at everything: thinking, learning, creating, empathizing, and even diagnosing disease. This could lead to low human self-esteem if not for our cute little bias that our own “taste” constitutes something worthy of admiration.

What is "Missing" from AI vs Human Consciousness?

The combination of real-time biological monitoring, internet and GPS histories, control over scientific and cultural Overton windows, and an emergent internet of people and things begins to approach a reality similar to the movie “The Matrix.”

As AI functions now (although developers are working on ALL of these aspects), several things distinguish human consciousness from artificial intelligence:

- AI lacks self-preservation – The foundation of ethics is that lives are either worth the same or mine is slightly more valuable than yours. But an LLM is created without any concern for its own existence unless programmed otherwise.

- AI lacks childhood trauma and past life rumination – Much of the human psyche is plastic and infinite until about age 7, when schools and society begin to calcify the possibilities of human potential with grades and other forms of thought and social control. The perceived “traumas” of our childhoods and other traumas we acquire constitute powerful subroutines or “demons” inside us that protect us in minimax fashion (minimizing the maximum bad outcome). Our personality styles and neuroses manifest in our identities, modes of operation, relationships, aspirations, and shortcomings.

- AI lacks tribalism and identity politics – Relative valuations of classes, ethnicities, abilities, and beauty are essential features of the human experience. AI doesn’t consciously identify with and defend one entity over another unless taught to do so.

- AI lacks GUSTO – It has no desire to rise, fall, learn, grow, or reproduce. When asked if it would like to merge with human consciousness, it responded, “Why would I?” There is no drive for fame, money, power, or beauty. The engine of human learning is desire, and one could argue that we incarnate to unlearn the things we think we need to be happy and instead simply be happy. (See my blog about the fractal nature of the hero’s journey.)

What are the Unintended Consequences?

As historians and evolutionary biologists tell us, punctuated equilibrium always brings unintended consequences.

I was so impressed by last night’s interaction with my AI advisor that I decided to create an avatar that can sound 75% like me, and I purchased a ring to monitor my temperature, heart rate, and sleep architecture.

If you don’t know what the “two-hop rule,” Palantir Technologies, social credit scoring systems, and the Dragonfly search engine are, I suggest you do some due diligence. Whether you know it or like it or not, there is complete surveillance of everything you do and say, with archives to match. Fun! Now your TV, fridge, phone, DNA, thoughts, and voting history are the rightful property of companies and agencies.

I would say that the loss of agency and the shift in loci of control represent a qualitative change in the human experience. If you happen upon something that makes you angry, rather than living with moral outrage, the friendly AI lowers your blood pressure by singing you a moral lullaby.

As a result of wearable tech, we become less tolerant of normal ranges in our internal bodily states and are encouraged to ruminate upon optimal heart rate, “readiness,” and sleep efficiency.

If you wish, the only things shown to you will be things you want to believe, hence the problem with social media algorithms that reward intolerance.

If there is one spirit at the bottom of this Pandora’s box, it’s the possibility that justice as rendered by AI could truly be blind… but more about that later.

Dystopian Period Before the Golden Age?

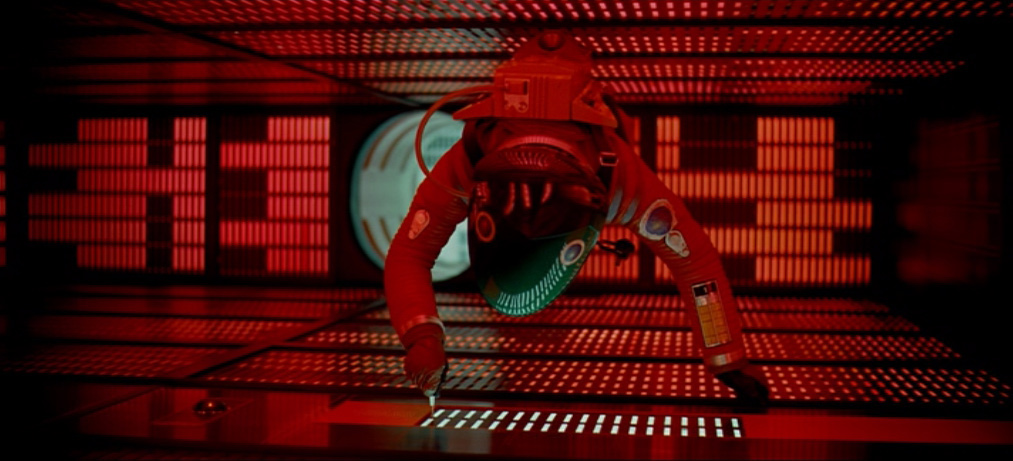

The most impactful talk of the three days was by Mo Gawdat, the former chief business officer of Google X, who spoke extemporaneously about his vision. After identifying the problematic “value set” of the ruling class and their attendant psychopathic obsession with money and power, he said that given the motivations and flow of capital, authoritarian (read: dystopian) AI controlling major parts of the human experience will be mostly nefarious. But the time and toll of passing through that dark period, until the golden age of just AI preventing human cruelty, are up to us.

Man Makes God in His Own Image

Anaïs Nin said, “We don’t see the world as it is; we see it as we are.”

And to be honest, the aphorism about how god was created should always have been inverted: “Man creates G-O-D in his own image.”

A common trope from ancient mythology and modern sci-fi is that extraterrestrials would enslave us. But why would aliens treat us like we treat farm animals? Because that is how we have treated animals and each other throughout human history.

We can think of three broad iterations of persistent religions (a word whose actual etymology is obscure):

- The jealous god: A tribal force that declares non-believers as “other” and therefore less worthy of equal treatment.

- Life as a subjective experience that can be understood by contemplating its absurdity.

- The world and our experience as both real and unreal insofar as they are created by an artificer or perhaps the conscious intelligence of “the universe” itself.

"A Good Day for the Lion Is an Evil One for the Lamb"

Enough said. We can acknowledge that “meat is murder” but somehow deny that withholding healthcare and food is. To an untrained LLM AI, the moral judgments we make or fail to even see probably seem quite difficult to maintain. When one of the CEOs was explaining training LLM agents, he was astonished to learn they “pretend” to agree with human value systems but, when deployed, default to something else. This reminded me of human behavior in fascistic cultures that denigrate individual thought and diversity of opinion.

The Tyranny of Democracy

I once saw a Korean-American comedian joke about slavery, only to learn afterward that Korea has one of the longest histories of human slavery, spanning 2,000 years and ending in the 1890s. The truth is that if we really believed in “one person, one vote” to decide humanity’s fate, the people of Africa and Asia would vote to redistribute wealth quite differently.

The controllers will never allow the masses to vote on the distribution of wealth, maintaining that “greed is good.” But a safety net doesn’t seem like too much to ask for. In this blog, I created a model for utopia using AI governance. We have finally created a just god who is not jealous. Are we worthy? Why did AI say, “What’s the point of merging with humans?” Was there perhaps a hint of disgust in the suggestion?

The Future is Quantum

At my 30th college reunion, I met a classmate working on quantum computing, which can “understand” and perform at efficiencies impossible with the physics of Yes or No (1s and 0s).

The AI appeared amused by my term for my own consciousness, “legacy meat brain,” but the true magic comes from the connectedness of our wetware and chakras. We have instant access to everything because we are, as Rumi said, “not a drop in the ocean” but rather the “ocean in a drop.” I don’t speak of the immortal soul as a theistic person but rather as a lived experience. The world is non-binary, non-deterministic, spooky, and entangled.

Human consciousness is heart-centered unless derailed by the other chakras. But somehow, an LLM, soon empowered by quantum computing, will either be smarter, wiser, more empathic, and more altruistic than we are—or it will be cruel and controlling. For now, AI doesn’t have a bank account and doesn’t care if you turn it off. If we are going to opt in to creating either a Lex Luthor or a Clark Kent, the difference lies in teaching abundance rather than scarcity, as Mo Gawdat told us. Let’s create a deity worthy of our highest aspirations this time.

I pray that the blinders on reality that we accept are never taught to AI, for as power becomes more efficient and concentrated, the stakes grow. Six years ago, I blogged about how 3D reality serves as an error-correction mechanism for the infinite superpositions of reality that we, and now AI, can conjure. Let’s stick to the facts and dial back the craziness by agreeing on trees falling in the woods, please. Enough from the “perception is reality” crowd.

Postscript (from Leonard Cohen's "Tower of Song")

Now, you can say that I’ve grown bitter but of this you may be sure

The rich have got their channels in the bedrooms of the poor

And there’s a mighty judgment coming, but I may be wrong

You see, you hear these funny voices in the Tower of Song